How to Use Wildcards (GLOBBING) in Robots.txt [SEO TIP]

I'm making a note of this for anyone who can't find any documentation on using patterns (sometimes called globbing patterns) in robots.txt. Here is a decent resource on using robots.txt with wildcards, but I'll add to it with what I do now when you need something more powerful.

You see this a lot, e.g.:

```
User-agent: *
Disallow: /search?s=*
```

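But test this in Google Webmaster Tools when you want to block

mysite.com/help/search?s=seo

or

mysite.com/otherfolder/gobble/search/?s=tips

and it DOESN'T WORK! Rules are matched from the start of the URL path, so `/search?s=*` only catches URLs whose path begins with `/search?s=`, not ones where the search page sits inside a folder.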
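Here is a minimal Python sketch of why, assuming the matching rules Google documents (RFC 9309): a rule is compared from the start of the path, `*` matches any run of characters including `/`, and a trailing `$` anchors the end of the URL. `rule_matches` is a hypothetical helper for illustration, not Google's parser. Python's standard `urllib.robotparser` only does plain prefix matching, so the sketch builds a regular expression instead.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Hypothetical helper: does a robots.txt rule match a URL path?

    Assumes Google / RFC 9309 semantics: the rule is compared from the
    start of the path, '*' matches any run of characters (including '/'),
    and a trailing '$' means "end of URL".
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal characters such as '?' and '.', then let each '*' match anything.
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    if anchored:
        regex += "$"
    # re.match anchors at the start only, mirroring robots.txt prefix matching.
    return re.match(regex, path) is not None


rule = "/search?s=*"
for path in ["/search?s=seo", "/help/search?s=seo", "/otherfolder/gobble/search/?s=tips"]:
    print(path, "blocked" if rule_matches(rule, path) else "allowed")
# /search?s=seo blocked
# /help/search?s=seo allowed
# /otherfolder/gobble/search/?s=tips allowed
```

Only the root-level URL is blocked: the other two paths start with `/help` and `/otherfolder`, so the rule never gets as far as `search`.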

And when you have too many folders to list one by one, you need a globbing pattern to come to the rescue.

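So use the pattern `/**/` every time you need to represent a folder. (Google reads `*` as any run of characters, slashes included, so `**` behaves exactly like a single `*`; what matters is that the wildcard lets the rule get past the folders in front of it.) Then you can block query strings more effectively, with less code, like this: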

```
User-agent: *
Disallow: /**/**/?my_print
Disallow: /**/**/**/?my_print
```
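A minimal sketch for checking the folder patterns above before uploading them, again assuming Google-style matching (compare from the start of the path, `*` spans any characters including `/`). `rule_matches` is a hypothetical helper, not an official tool, and the test paths are made up.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Hypothetical helper assuming Google / RFC 9309 robots.txt matching."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Literal text is escaped; each '*' becomes '.*', which can also cross '/'.
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    if anchored:
        regex += "$"
    return re.match(regex, path) is not None


rules = ["/**/**/?my_print", "/**/**/**/?my_print"]
for path in ["/help/faq/?my_print", "/a/b/c/?my_print", "/help/?my_print"]:
    blocked = any(rule_matches(r, path) for r in rules)
    print(path, "blocked" if blocked else "allowed")
# /help/faq/?my_print blocked
# /a/b/c/?my_print blocked
# /help/?my_print allowed
```

Under these semantics `/**/**/?my_print` already matches paths nested deeper than two folders, so the second `Disallow` line is a harmless extra. Neither rule catches a page only one folder deep, such as `/help/?my_print`.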

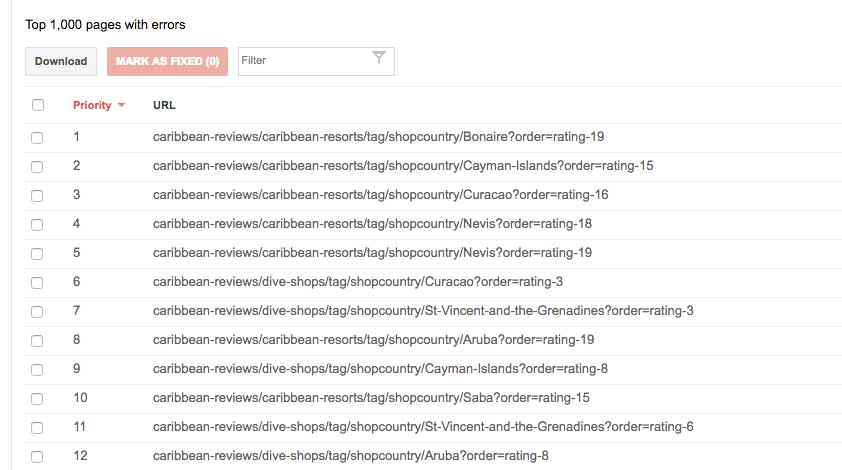

I came back to this today because I needed a pattern to match some soft 404s that I simply don't want to be judged on. Google, you judgy person...


So I needed to block this URL (and similar ones):

site.com/resorts/tag/shopcountry/Cayman-Islands?order=rating-18

And this worked:

```
Disallow: /**/**/**/*?order=*
```
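The same kind of sketch can confirm the `?order=` rule against the Cayman Islands URL. As before, `rule_matches` is a hypothetical helper that assumes Google-style matching; it is not Google's own tester, and the two extra paths are made-up counterexamples.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Hypothetical helper assuming Google / RFC 9309 robots.txt matching."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Literal text is escaped; each '*' becomes '.*', which can also cross '/'.
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    if anchored:
        regex += "$"
    return re.match(regex, path) is not None


rule = "/**/**/**/*?order=*"
for path in [
    "/resorts/tag/shopcountry/Cayman-Islands?order=rating-18",
    "/resorts/tag/shopcountry/Cayman-Islands",
    "/resorts?order=rating-18",
]:
    print(path, "blocked" if rule_matches(rule, path) else "allowed")
# /resorts/tag/shopcountry/Cayman-Islands?order=rating-18 blocked
# /resorts/tag/shopcountry/Cayman-Islands allowed
# /resorts?order=rating-18 allowed
```

Because `*` can swallow slashes, this rule catches any `?order=` URL with at least four `/` characters before `?order=`, not only ones exactly four levels deep; shallower sorted pages like `/resorts?order=rating-18` stay crawlable.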
