Google Search Console is a powerful tool provided by Google that helps website owners, webmasters, and SEO professionals (technical SEO too) monitor and manage their website's presence in Google's search results. It offers a variety of features and insights to help optimize your website for better search engine performance.

Google Search Console is a web app made by Google that lets webmasters and site owners see how Google sees and understands their website(s). It is, and always has been, free to use. Data is limited to 16 months, so if you want access to older data you need to start collecting it yourself. Google is not the only company to offer a web app like this for owners and SEO professionals: Microsoft has the very good Bing Webmaster Tools. That is a real advantage, because issues found in either tool usually help with optimisation for both search engines.
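If you do decide to collect the data yourself, a minimal sketch of a local archive might look like this. It assumes you already have daily rows exported from GSC as dictionaries; the table name `gsc_daily`, the column names and the `archive_rows` helper are all hypothetical and not part of any Google tool.

```python
import sqlite3

# Hypothetical schema: one row per day, page and query.
SCHEMA = """
CREATE TABLE IF NOT EXISTS gsc_daily (
    day TEXT NOT NULL,
    page TEXT NOT NULL,
    query TEXT NOT NULL,
    clicks INTEGER NOT NULL,
    impressions INTEGER NOT NULL,
    position REAL NOT NULL,
    PRIMARY KEY (day, page, query)
)
"""


def archive_rows(conn, rows):
    """Store exported rows and return the total number of archived rows.

    INSERT OR REPLACE means re-importing the same day does not create
    duplicates, so the import can be re-run safely.
    """
    conn.execute(SCHEMA)
    conn.executemany(
        "INSERT OR REPLACE INTO gsc_daily "
        "VALUES (:day, :page, :query, :clicks, :impressions, :position)",
        rows,
    )
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM gsc_daily").fetchone()[0]


# Example rows (made-up values, for illustration only).
example_rows = [
    {"day": "2023-09-01", "page": "/holidays/", "query": "cheap holidays",
     "clicks": 12, "impressions": 340, "position": 6.4},
    {"day": "2023-09-01", "page": "/flights/", "query": "cheap flights",
     "clicks": 3, "impressions": 210, "position": 11.2},
]
```

Run on a schedule, something like this keeps building history beyond the 16-month window; swapping the in-memory database used in testing for a file path makes the archive persistent.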

Ways To Use Google Search Console (GSC)

As a search marketing professional myself, I will share my use cases for this tool. There are many other ways to use it, but these are the areas I like to analyze: Search Performance, Core Web Vitals, Sitemaps, Indexation and Keywords.

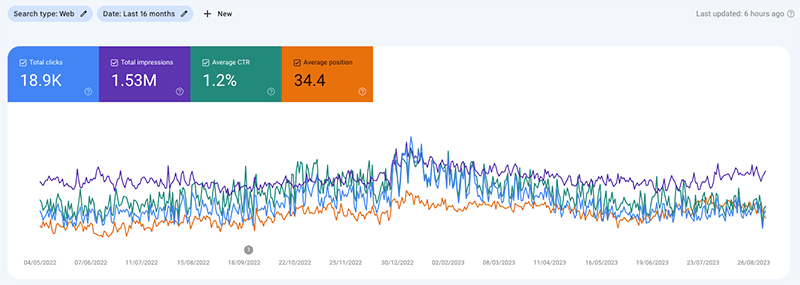

Sample 16-month default chart for a travel website

You can break down the data further by URL (Page), Keyword (Query), Country, Device and Search Appearance. This allows you to inspect performance for particular pages or keywords that may be of greater interest. If you are doing DIY SEO and have the time, I recommend spending some of it filtering and looking at the data. The date comparison feature is also very useful for seeing where drops in traffic or other metrics occur.
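The same date comparison can be reproduced outside the interface once the data is exported. The sketch below assumes two dictionaries mapping each page to its clicks for the previous and current period; the function name `click_changes` and the sample numbers are illustrative only.

```python
def click_changes(previous, current):
    """Return (page, change_in_clicks) pairs, biggest drops first.

    Pages missing from one period are treated as having zero clicks there.
    """
    pages = set(previous) | set(current)
    changes = [
        (page, current.get(page, 0) - previous.get(page, 0))
        for page in pages
    ]
    # Sort by change (most negative first), then by page for a stable order.
    return sorted(changes, key=lambda item: (item[1], item[0]))


# Made-up figures for two comparison periods.
previous_period = {"/a": 100, "/b": 50}
current_period = {"/a": 40, "/b": 60, "/c": 5}
changes = click_changes(previous_period, current_period)
```

The pages at the top of the resulting list are the ones to inspect first when traffic has dropped.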

The main metrics of SEO

Clicks

I find that some clicks go unreported, so treat the numbers as a worst case. It is worth stating that GSC has historically been quite poor and still has shortcomings like this. Clicks come from organic search only; other traffic, such as PPC or direct traffic, is excluded.

Key insight: Any page receiving clicks is bringing in traffic, and Google really does like these pages. Use them wisely, and work on earning clicks for pages that show a decent position but don't get clicks yet. With Google's move away from its excellent Universal Analytics tool to GA4, the relevance of this click report only increases, as GA4 sucks.

Impressions

If you appear in position 45, on page 5, you are unlikely to get clicks, as people don't look that far unless they are deep-searching a topic. But you will be credited with an impression whenever your result is listed on a results page the searcher loads, even if they never visit your page. Impressions are also shown next to keywords, which is helpful for understanding how popular search terms are. This means you can get a feel for keyword volume here even when you don't rank well.

Key insight: As you add content and Google indexes it, impressions will start to increase. Now you have the chance for increased traffic. You have to be in it to win it. A lack of impressions could mean a lack of content or problems with indexing.

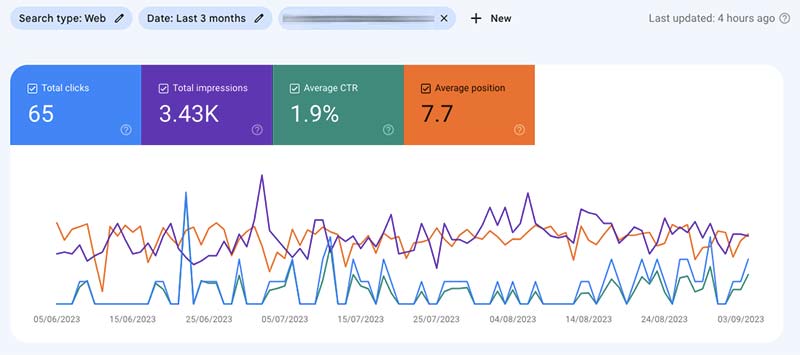

CTR (Click-Through Rate)

CTR should only be used when you have a few filters on; otherwise the data is not very meaningful. Keywords where you are not on the first page lower the overall CTR and skew the data.

Pay attention to CTR in filtered views of a particular page: if the number is below 4% while you are in positions 1-5, some optimisation is required to how your listing appears in the SERPs, which you can control.

Sample CTR
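As a rough sketch of that check, CTR is simply clicks divided by impressions, and the rule of thumb above (below 4% while in positions 1-5) can be applied to exported rows. The row layout and the `low_ctr_pages` helper are assumptions made for illustration.

```python
def low_ctr_pages(rows, max_position=5.0, min_ctr=0.04):
    """Return (page, ctr) for rows ranking in the top positions with low CTR.

    Each row is assumed to be a dict with 'page', 'clicks', 'impressions'
    and 'position' keys, e.g. taken from a filtered GSC export.
    """
    flagged = []
    for row in rows:
        if row["impressions"] == 0:
            continue  # CTR is undefined without impressions
        ctr = row["clicks"] / row["impressions"]
        if 1.0 <= row["position"] <= max_position and ctr < min_ctr:
            flagged.append((row["page"], round(ctr, 4)))
    return flagged


# Made-up rows for illustration.
sample_rows = [
    {"page": "/a", "clicks": 10, "impressions": 1000, "position": 3.2},
    {"page": "/b", "clicks": 80, "impressions": 1000, "position": 2.0},
    {"page": "/c", "clicks": 5, "impressions": 1000, "position": 12.0},
]
flagged = low_ctr_pages(sample_rows)
```

Here only `/a` is flagged: it ranks in the top five but converts just 1% of impressions into clicks, so its listing is the one to rework.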

Average Position

This is useless if you are looking at a whole site, but it can indicate trending performance for a folder. A folder can be selected by using the page filter and choosing 'contains' instead of 'exact match'. It is also useful for tracking individual keywords over time.

New content giving rise to new keywords makes this score seem worse, as new keywords tend to enter at numerically high (poor) positions. So apply filters to this metric as needed.
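To work out a folder-level figure from exported rows, one reasonable approach is an impression-weighted average over every URL containing the folder path, mirroring the 'contains' filter. This is a sketch under that assumption; the weighting choice and the `folder_average_position` name are illustrative and may not match exactly how GSC aggregates internally.

```python
def folder_average_position(rows, folder):
    """Impression-weighted average position for pages containing `folder`.

    Returns None when no matching page has any impressions.
    """
    total_impressions = 0
    weighted_sum = 0.0
    for row in rows:
        if folder in row["page"]:  # same idea as the 'contains' page filter
            total_impressions += row["impressions"]
            weighted_sum += row["position"] * row["impressions"]
    if total_impressions == 0:
        return None
    return weighted_sum / total_impressions


# Made-up rows for illustration.
position_rows = [
    {"page": "/blog/a", "position": 4.0, "impressions": 100},
    {"page": "/blog/b", "position": 10.0, "impressions": 300},
    {"page": "/shop/x", "position": 1.0, "impressions": 1000},
]
blog_position = folder_average_position(position_rows, "/blog/")
```

Weighting by impressions stops a rarely shown page from dragging the folder figure around as much as a page that appears in search all the time.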

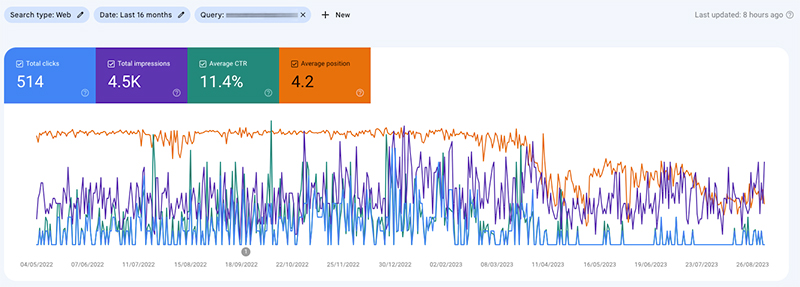

In this screenshot we can see that the average position is dropping for a particular query.

GSC flips the axis for position so that better (numerically lower) rankings plot higher, in line with the other metrics, i.e. position 1 sits above position 10 on the chart.

So this keyword has started to drop from April 2023. This could be due to competition or an algorithm update. This is a typical on-page SEO analysis task that your agency or SEO freelancer should be doing for you. Notice the Google update on the 16th of September, marked with the little grey disc on the x-axis. In my experience the drop occurs too long afterwards for the update to be the root cause.

Your monthly SEO reporting should be showing some of these metrics for your most important pages. Consider getting a professional SEO Dashboard built using Looker Studio which your team can access anytime to expose the most important data.

Insight into crawlability and Google indexing

GSC is also the main tool to trust, because it is closest to the real source of the Google index. You can inspect specific pages to see how Google is interpreting them. Google Search Console also tells you when there are issues with pages, and as a webmaster you will be emailed when issues are found. Get your technical SEO team to look into each and every one. You can request that pages be removed, and you can inform Google of new pages, which is recommended, including when you make changes to an existing page.

Mobile UX

The Mobile Usability tool gives insight into how well your website displays on mobile devices. Google judges your pages on the 'mobile first' idea because, by volume, this is mostly how the web is consumed. A fast-loading page on a mobile device with more limited bandwidth is going to rank better (all other things being equal).

Core Web Vitals

Google has engineered automated ways (Machine Learning and AI) to measure how well a page loads and how UX-friendly it is for a user through its Core Web Vitals. You know that really annoying mis-click you make when the page jumps as it loads, and you start loading a page you didn't want? Anyone who uses GSC knows this is particularly annoying, as GSC suffers from it itself. Well, that is an example of CLS, or Cumulative Layout Shift. There are free tools and many places to check your CWV scores, but here in GSC you can get some insight.
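For reference, Google publishes 'good' and 'poor' thresholds for each Core Web Vital. The sketch below hard-codes the published thresholds for CLS (0.1 and 0.25) and for Largest Contentful Paint, LCP (2.5 and 4.0 seconds), and rates a measured value against them. The dictionary and function names are hypothetical.

```python
# (good_up_to, poor_above) thresholds as published by Google.
# CLS is unitless; LCP is measured in seconds.
THRESHOLDS = {
    "cls": (0.1, 0.25),
    "lcp_seconds": (2.5, 4.0),
}


def rate_metric(name, value):
    """Classify a measurement as 'good', 'needs improvement' or 'poor'."""
    good_up_to, poor_above = THRESHOLDS[name]
    if value <= good_up_to:
        return "good"
    if value <= poor_above:
        return "needs improvement"
    return "poor"
```

For example, `rate_metric("cls", 0.2)` returns `"needs improvement"`, which matches the amber band shown in GSC's Core Web Vitals report.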

Security Issues

It is easy to program a crawler to detect spam content and hacked content, and GSC does this, but don't assume it will spot every instance. Google products respect robots.txt and its do-not-crawl (Disallow) directives, so a hacker could use them to hide hacked pages from Google. So GSC isn't foolproof, but every little helps.
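One way to look for this yourself is to check which URLs your own robots.txt hides from Googlebot. The sketch below uses Python's standard `urllib.robotparser` on robots.txt content you have already downloaded; the `blocked_urls` helper, the sample rules and the example.com URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt content disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Made-up robots.txt content and URLs.
sample_robots = "User-agent: *\nDisallow: /hidden/\n"
sample_urls = [
    "https://example.com/",
    "https://example.com/hidden/spam.html",
]
hidden = blocked_urls(sample_robots, sample_urls)
```

Any Disallow rule you don't recognise, especially one covering a folder you never created, is worth investigating.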

How to get started using Google Search Console

You will need to prove ownership of your website in some way, so there is a validation process. This can be done via a DNS TXT entry or by adding some code to the website. Both of these should only be available to an owner. Did you give your website credentials away?
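For the DNS route, Google gives you a TXT record value that begins with `google-site-verification=`. As a small sketch, assuming you have already looked up your domain's TXT records by some other means, you could confirm that the record is in place like this. The helper name and the token shown are placeholders, not real values.

```python
VERIFICATION_PREFIX = "google-site-verification="


def find_verification_tokens(txt_records):
    """Return the tokens from any Google verification TXT records."""
    tokens = []
    for record in txt_records:
        value = record.strip().strip('"')  # TXT values are often quoted
        if value.startswith(VERIFICATION_PREFIX):
            tokens.append(value[len(VERIFICATION_PREFIX):])
    return tokens


# Placeholder records; the token is not a real one.
sample_records = [
    '"v=spf1 include:example.net ~all"',
    '"google-site-verification=PLACEHOLDER_TOKEN"',
]
tokens = find_verification_tokens(sample_records)
```

An empty result means the record has not been published yet (or has not propagated), so verification in GSC would be expected to fail.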

Here's how people use Google Search Console:

1. **Verification**: To get started, you need to verify ownership of your website or property. This typically involves adding a specific code snippet to your website's HTML or uploading a verification file to your server. Google provides several verification methods to choose from.

2. **Performance Monitoring**:

- **Search Analytics**: This feature shows you how often your site appears in Google search results, which queries drive traffic to your site, and the average position of your pages in search results. This data is crucial for understanding your website's visibility.

3. **Index Coverage**:

- **Index Status**: Check the status of your website's indexed pages and ensure that Google is able to crawl and index your content without any issues.

- **Sitemap Submission**: Submit your website's sitemap to Google to help it discover and index your pages more efficiently.

4. **URL Inspection Tool**: This tool allows you to inspect a specific URL on your website and see how Googlebot views it. It helps identify indexing issues and provides information about indexing, mobile-friendliness, and more.

5. **Mobile Usability**: Check how mobile-friendly your website is. Google considers mobile-friendliness as a ranking factor, so this feature helps you ensure that your site is accessible and usable on mobile devices.

6. **Security Issues**: Google Search Console can alert you to security issues on your website, such as malware or hacked content, which can negatively impact your search rankings and user trust.

7. **Enhancements**:

- **Structured Data**: Verify and monitor structured data (schema markup) on your site to enhance search results with rich snippets and improve click-through rates.

- **Core Web Vitals**: Access data on your site's performance related to loading, interactivity, and visual stability – factors that can impact user experience and search rankings.

8. **Links**:

- **Internal Links**: Analyze the internal linking structure of your website to improve crawlability and user navigation.

- **External Links**: View the external websites that link to your site, which can help you identify backlink opportunities and potential issues like spammy links.

9. **Manual Actions**: Check if Google has applied any manual actions to your site, such as penalties for violating Google's webmaster guidelines. If you have a manual action, you'll need to address the issue and request a review.

10. **Settings**:

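- Configure settings related to your property, such as users and permissions. Note that older options such as international targeting and preferred domain (www vs. non-www) have been retired from current versions of Search Console.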

11. **Performance Optimization**: Use the insights from Search Console to make data-driven decisions for optimizing your website's content, structure, and performance for better search rankings.

12. **Monitoring Algorithm Updates**: Keep an eye on your site's performance during Google algorithm updates. This can help you identify any changes in visibility and adapt your SEO strategy accordingly.
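For the sitemap submission step in the list above, a minimal sitemap can be generated with Python's standard library. This is a sketch only: the `build_sitemap` helper and the example.com URLs are illustrative, and a real sitemap would normally be written to a file with an XML declaration and served from your site before being submitted in GSC.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal sitemap XML string listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# Made-up URLs for illustration.
sitemap_xml = build_sitemap([
    "https://example.com/",
    "https://example.com/about/",
])
```

Only the required `<loc>` element is included here; optional elements such as `<lastmod>` can be added in the same way.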

In summary, people use Google Search Console to monitor, analyze, and optimize their websites for better visibility in Google's search results. It provides valuable insights and tools to improve SEO, resolve issues, and enhance the overall user experience on your website.

The Author: Calvin Crane, SEO Consultant

Cambs Digital: helping you achieve through the beauty of the web.

You can find me on LinkedIn.

"Hi, my name is Calvin and I have been building websites since 1998. Things have changed a lot over the years, but the need for a business to have a good website is as strong as ever. Credibility is vital, and the first impression a potential customer gets is often your website. For now I simply want to tell you that you have an excellent developer/designer in myself at your disposal for creating the ideal website.

Your project will not be too big or too small. I have worked for many years in London on big brands and I have been pivotal in building websites such as thomascook.de. The largest site I currently maintain and manage is in excess of 25k pages. This takes an extra level of planning, especially with the URLs and SEO concerns.

You may not be aware of what is possible within a modest budget so the following is a sample of the different kinds of website I have built and can build for you."